Is your website getting the visibility it deserves? If search engines can’t crawl your site, you’re missing out on valuable traffic. Crawlability is a key part of SEO, ensuring search engines can access and index your pages. Without it, even great content may never be seen.

But don’t worry—testing and improving crawlability doesn’t have to be complicated. With tools like Google Search Console, Screaming Frog SEO Spider, and bulk indexing checks, you can quickly identify and fix crawlability issues.

In this guide, we’ll walk you through the best methods to ensure your site is fully crawlable, from basic URL inspection to advanced SEO tools.

What Does It Mean for a Website to Be Crawlable?

Before getting into the nitty-gritty, let’s clarify what it means for a website to be crawlable. Simply put, when a website is crawlable, search engine bots, like Googlebot, can easily navigate and read the pages on your site.

Why Is Crawlability Important for SEO?

Crawlability is foundational for SEO. Without it, even the most well-designed website won’t show up in search results. Search engine crawlers are responsible for discovering new content and updates on your website. If they can’t reach your pages, they can’t index them, meaning your site remains invisible to search engines.

What Is Crawlability in SEO?

Crawlability refers to the ability of search engines to access and explore the pages on your website. This process is essential for ensuring that search engines understand your content and rank it accordingly in search results.

How Search Engine Crawlers Work

Search engines use bots (or crawlers) to navigate through the web. These bots move from one page to another by following links. If your pages aren’t crawlable, these bots won’t be able to find them, leaving those pages out of search engine rankings.

Crawlability vs. Indexability

While crawlability refers to the ability of search engines to access your website, indexability refers to whether the search engine chooses to include a page in its database. Just because a page is crawlable doesn’t mean it will be indexed.

If a crawler can’t access your page due to crawlability issues, it won’t be able to index it. This is why it’s vital to address crawlability problems before focusing on your site’s indexability.

Common Crawlability Problems

One of the most frustrating problems a website owner can face is poor crawlability. Let’s take a look at some common issues that may prevent your site from being fully crawlable.

Site Structure and Internal Links

Your website’s structure should be logical and simple. Search engine crawlers follow links to discover new pages, so if your internal links are hard to find or non-existent, crawlers may miss large parts of your website. A clear, well-organized site structure helps ensure that every page can be reached within a few clicks.

Non-HTML Links

Search engines prefer standard HTML links, as they are easier to crawl. If your website uses non-HTML links, such as JavaScript or Flash-based navigation, search engine crawlers may have trouble following them. This could lead to missing key pages, resulting in crawlability issues.

Server Errors

Server errors such as 500 Internal Server Error can stop crawlers in their tracks, and broken URLs that return 404 Not Found (a client error rather than a server error) lead them to dead ends. Regular server maintenance and monitoring are crucial to preventing these errors, ensuring that all pages on your site remain accessible to both users and search engines.

Redirects

Incorrectly implemented redirects can cause crawlers to get stuck in loops, hindering their ability to crawl your site effectively. Use 301 redirects for permanent URL changes and avoid redirect chains, which can consume your crawl budget and confuse search engines.
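
To make the difference between a clean redirect, a chain, and a loop concrete, here is a minimal Python sketch. It assumes you already have a mapping of redirecting URLs to their targets (for example, exported from a crawl); the `REDIRECTS` data and the `trace_redirects` helper are hypothetical names used only for illustration.

```python
# Hypothetical redirect map: source URL -> URL it redirects to.
REDIRECTS = {
    "/old": "/newer",
    "/newer": "/newest",   # "/old" needs two hops: a redirect chain
    "/a": "/b",
    "/b": "/a",            # "/a" and "/b" point at each other: a loop
    "/x": "/y",            # a single, clean redirect
}

def trace_redirects(start, redirects, max_hops=10):
    """Follow redirects from `start` and describe how the path ends.

    Returns (path, outcome), where outcome is one of
    "ok" (zero or one hop), "chain" (more than one hop),
    "loop" (a URL repeats), or "too_long" (more than max_hops).
    """
    path = [start]
    seen = {start}
    current = start
    while current in redirects:
        current = redirects[current]
        path.append(current)
        if current in seen:
            return path, "loop"
        seen.add(current)
        if len(path) - 1 > max_hops:
            return path, "too_long"
    hops = len(path) - 1
    outcome = "chain" if hops > 1 else "ok"
    return path, outcome

for url in ("/old", "/a", "/x"):
    print(url, trace_redirects(url, REDIRECTS))
```

Anything reported as a chain can usually be fixed by pointing the first URL straight at the final destination, and a loop needs one of its redirects removed.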

Sitemaps

A properly configured XML sitemap is essential for crawlability. Sitemaps help search engines understand the structure of your website and find important pages more efficiently. If your sitemap is missing or incorrectly configured, crawlers may struggle to navigate your site.

Blocking Access

Sometimes, specific pages or entire sections of a website are accidentally blocked from search engine crawlers via robots.txt, or kept out of the index by a noindex meta tag. Ensure that these blocks are only in place for pages that you don’t want crawled or indexed, like internal admin pages.

Crawl Budget

Crawl budget refers to the number of pages search engines will crawl on your website within a specific timeframe. Large sites need to manage their crawl budget carefully to avoid having important pages overlooked. If crawlers waste time on less important pages, high-priority content may not be crawled.

How to Perform a Crawlability Test

Checking if your page is both crawlable and indexable is a critical part of your SEO strategy. A page may be crawlable but not indexable due to specific tags or settings that block it from appearing in search results. Here’s how you can verify both.

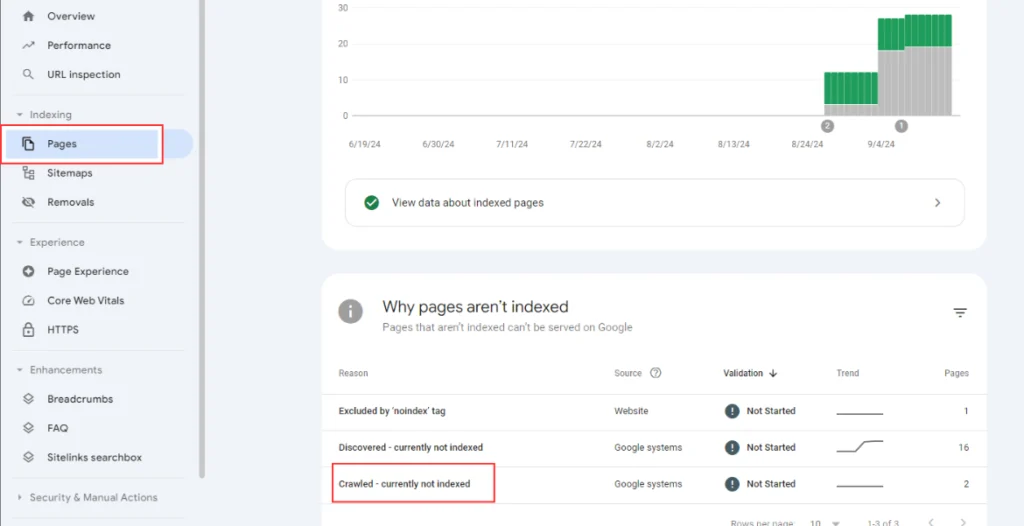

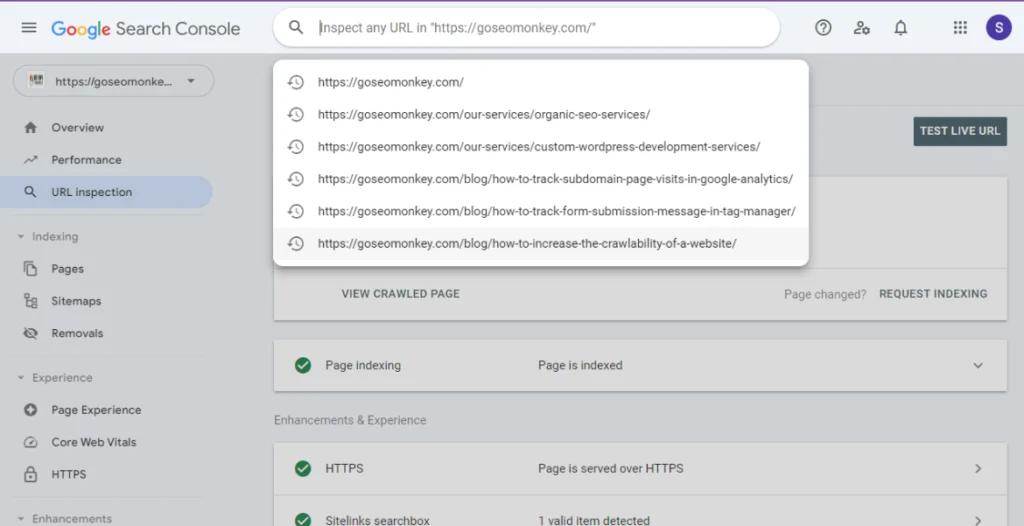

Method One: Via Google Search Console

Google Search Console offers an easy and comprehensive way to check if your page is crawlable and indexable:

- URL Inspection Tool: Simply paste the URL you want to check into the URL Inspection tool in Google Search Console. It will provide detailed information on crawlability and indexing status.

- Crawl Errors: This tool will also show you if there are any crawl errors or reasons why a page isn’t being indexed, such as being blocked by robots.txt or meta tags.

Google Search Console is beginner-friendly and should be your first stop when troubleshooting crawlability issues.

Method Two: Screaming Frog SEO Spider

For a more in-depth analysis of crawlability across your site, Screaming Frog SEO Spider is an excellent tool. Here’s how you can use it:

- Crawl the Site: Enter your website’s URL into Screaming Frog, and it will perform a full crawl. It identifies issues like broken links, missing meta tags, noindex tags, and server errors.

- Check Indexability: Screaming Frog highlights which pages are indexable and flags any that are marked with noindex or have been blocked by robots.txt.

- Detailed Reports: The tool offers reports on status codes, redirects, and other factors that can affect crawlability and indexing.

Screaming Frog gives you the granularity needed to pinpoint even minor crawlability issues.

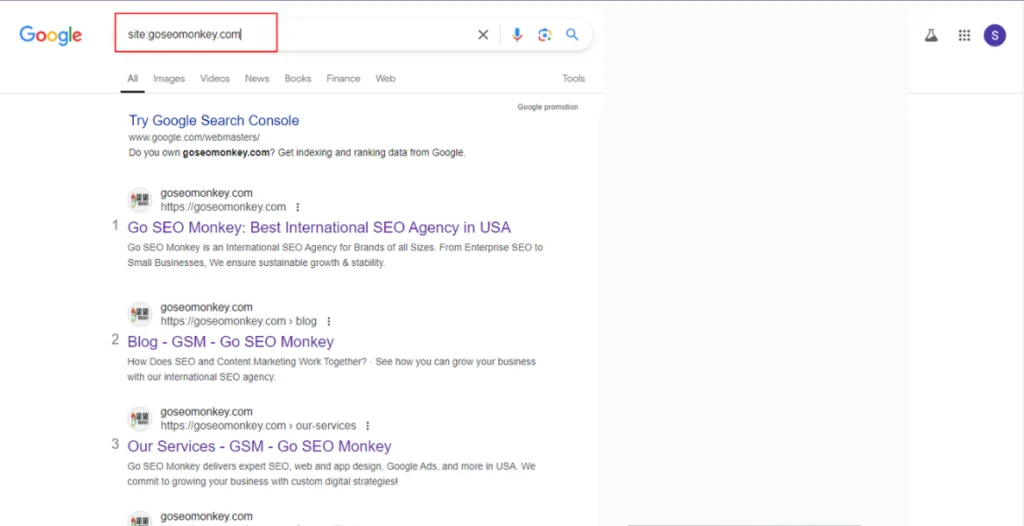

Method Three: Site Command in Google

Another quick method to check if a page is indexed is by using Google’s “site” command:

- Search for the URL: Type site:yourdomain.com/page-url into the Google search bar. If your page appears in the results, it’s both crawlable and indexed.

- Missing from Results: If the page doesn’t show up, it means Google either hasn’t crawled it or it’s not indexed. In this case, you’ll need to investigate further using other tools like Google Search Console or Screaming Frog.

This method gives a fast, on-the-fly check of your page’s indexation status.

Method Four: Bulk Check

For larger sites, checking crawlability and indexability for multiple pages individually can be time-consuming. Here are a few ways to check in bulk:

- Screaming Frog Bulk Crawl: You can crawl multiple URLs and review their crawlability and indexability status in a single report.

- Google Search Console URL Inspection API: The URL Inspection tool in the Search Console interface checks one URL at a time. To check many URLs, you can call the URL Inspection API from a script, subject to Google’s daily quota.

- Third-Party Tools: Tools like Ahrefs and SEMrush offer bulk indexing checks to ensure multiple pages are both crawlable and indexed by search engines.

By using these bulk tools, you can save time and quickly identify crawlability issues across your entire site.

How to Make Sure Your Website Is Crawlable

A comprehensive crawlability and indexing checklist will ensure that your website remains accessible and optimized for search engines.

Robots.txt File

- Ensure that the robots.txt file is correctly configured, allowing search engines to crawl all necessary parts of your website.

- Double-check that essential pages are not accidentally blocked.
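
One way to double-check this is with Python's built-in `urllib.robotparser`. The sketch below is only an illustration: the robots.txt content and the example.com URLs are made up, and the rules are inlined so the code runs without a network connection. Note that Python's parser does simple path-prefix matching, so it may not agree with Googlebot on rules that use wildcards.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, inlined so the sketch runs offline.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart/
"""

def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt rules disallow for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]

# Hypothetical list of pages you expect to be crawlable.
IMPORTANT_URLS = [
    "https://example.com/",
    "https://example.com/blog/crawlability",
    "https://example.com/admin/login",
]

print(blocked_urls(ROBOTS_TXT, IMPORTANT_URLS))
```

If an essential page shows up in the returned list, the matching Disallow rule is the first thing to review.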

XML Sitemap(s)

- Submit a properly formatted XML sitemap to Google Search Console.

- Ensure the sitemap is up to date and includes all crucial pages.
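
As a rough way to confirm that crucial pages are listed, you could parse the sitemap and compare it with your own list of must-have URLs. This is a sketch using Python's standard `xml.etree.ElementTree`; the sample sitemap, the example.com URLs, and the helper names are assumptions for illustration. In practice you would load your real sitemap.xml instead of the inlined string.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

# Hypothetical sitemap content, inlined so the sketch runs offline.
SITEMAP_XML = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc><lastmod>2024-05-01</lastmod></url>
  <url><loc>https://example.com/blog/crawlability</loc></url>
</urlset>
"""

def sitemap_urls(xml_text):
    """Return every <loc> URL listed in a sitemap document."""
    root = ET.fromstring(xml_text)
    return [
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
        if loc.text
    ]

def missing_from_sitemap(xml_text, must_have):
    """Return the must-have URLs that the sitemap does not list."""
    listed = set(sitemap_urls(xml_text))
    return [url for url in must_have if url not in listed]

print(missing_from_sitemap(
    SITEMAP_XML,
    ["https://example.com/", "https://example.com/pricing"],
))
```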

Site Structure

- Make sure your website’s structure is intuitive, with every page reachable within a few clicks.

- Use descriptive anchor text for internal links to help search engines understand the context of each link.
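
To put a number on "within a few clicks", you can measure click depth: the fewest links a crawler has to follow from the homepage to reach each page. The sketch below uses a breadth-first search over a small internal-link map. The `LINKS` data and the `click_depths` helper are hypothetical; a real link map would come from a crawl of your own site.

```python
from collections import deque

# Hypothetical internal-link map: page -> pages it links to.
LINKS = {
    "/": ["/blog", "/about"],
    "/blog": ["/blog/post-1"],
    "/blog/post-1": ["/blog/post-1/comments"],
    "/old-landing": ["/"],  # links out, but nothing links to it
}

def click_depths(links, home="/"):
    """Breadth-first search from the homepage.

    Returns a dict of page -> minimum number of clicks from `home`.
    Pages that cannot be reached by following links are left out.
    """
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths

depths = click_depths(LINKS)
deep_pages = [page for page, depth in depths.items() if depth > 2]
print(depths)
print("Deeper than 2 clicks:", deep_pages)
```

Pages that sit many clicks deep are good candidates for a link from a category page or the main navigation.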

Orphaned Pages

- Orphaned pages (pages without internal links) are difficult for crawlers to find. Regularly audit your site to ensure all pages are connected via internal links.
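
A simple audit, sketched below, compares the pages you know exist (for instance, from your sitemap) against the pages that receive at least one internal link. The page list, link map, and `find_orphans` helper are hypothetical and stand in for data from your own crawl.

```python
# Hypothetical list of pages you know exist (e.g. from the sitemap).
KNOWN_PAGES = ["/", "/blog", "/blog/post-1", "/landing/old-promo"]

# Hypothetical internal-link map: page -> pages it links to.
LINKS = {
    "/": ["/blog"],
    "/blog": ["/", "/blog/post-1"],
    "/landing/old-promo": ["/"],
}

def find_orphans(known_pages, links, home="/"):
    """Return known pages that no other page links to (homepage excluded)."""
    linked_to = {
        target
        for source, targets in links.items()
        for target in targets
        if target != source  # a page linking to itself does not count
    }
    return sorted(
        page for page in known_pages
        if page not in linked_to and page != home
    )

print(find_orphans(KNOWN_PAGES, LINKS))
```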

Internal Links

- Strengthen your internal linking strategy to help search engines find and prioritize pages. Internal links should guide both users and crawlers through your website seamlessly.

Status Codes

- Regularly monitor your site for broken links and ensure that all pages return the correct 200 status code.

- Avoid 404 errors and server errors that block crawlability.

Noindex and Canonical Tags

- Review your noindex tags to ensure only irrelevant pages are excluded from search results.

- Implement canonical tags to handle duplicate content issues and direct crawlers to the correct version of each page.
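
If you want to review these tags across many pages, a small script can pull them out of each page's HTML. The following is a minimal sketch using Python's standard `html.parser`; the sample HTML and the `indexing_signals` helper are made up for illustration, and it only looks at the robots meta tag and the canonical link element (not HTTP headers such as X-Robots-Tag).

```python
from html.parser import HTMLParser

class IndexingSignals(HTMLParser):
    """Collects robots meta directives and the canonical URL from a page."""

    def __init__(self):
        super().__init__()
        self.robots = []
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            content = attrs.get("content") or ""
            self.robots += [d.strip().lower() for d in content.split(",") if d.strip()]
        elif tag == "link" and "canonical" in (attrs.get("rel") or "").lower().split():
            self.canonical = attrs.get("href")

def indexing_signals(html):
    """Return the noindex/nofollow flags and canonical URL found in `html`."""
    parser = IndexingSignals()
    parser.feed(html)
    return {
        "noindex": "noindex" in parser.robots,
        "nofollow": "nofollow" in parser.robots,
        "canonical": parser.canonical,
    }

# Hypothetical page source.
SAMPLE_HTML = """
<html><head>
  <meta name="robots" content="noindex, follow">
  <link rel="canonical" href="https://example.com/page">
</head><body></body></html>
"""

print(indexing_signals(SAMPLE_HTML))
```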

Crawl Stats Report

- Use Google Search Console’s Crawl Stats report to track crawling activity and identify any slowdowns or missed pages.

- Look for patterns in crawl errors and resolve them quickly to maintain crawlability.

How to Improve Crawlability

Improving crawlability is essential for optimizing your website’s performance in search results. Here are some key steps to enhance crawlability:

- Optimize Your Robots.txt File: Double-check your robots.txt file to ensure that important pages are not being blocked. Allow crawlers access to vital content and ensure your file is well-structured.

- Update and Submit Your XML Sitemap: Ensure your sitemap.xml is current and submit it to Google Search Console. A clear and concise sitemap will help search engines navigate your website more efficiently.

- Improve Site Structure: Maintain a logical and user-friendly site structure with clearly defined categories and subcategories. This will make it easier for crawlers to access all of your content.

- Fix Orphaned Pages: Use internal links to connect orphaned pages to the rest of your website. Every page should be accessible within a few clicks from your homepage.

- Monitor Status Codes: Ensure that all pages return a 200 status code and that broken links are promptly fixed. This will prevent crawlers from getting stuck or missing important pages.

- Use Canonical Tags Correctly: Implement canonical tags on duplicate pages to point search engines to the original source. This helps avoid confusion and keeps your crawl budget from being wasted.

- Fix Redirects: Ensure that all redirects are properly implemented and avoid chains or loops. Use 301 redirects for permanent URL changes and audit them regularly.

- Monitor Crawl Budget: For large websites, managing your crawl budget is critical. Prioritize high-value pages in your sitemap and reduce crawl frequency for less important or rarely updated pages.

- Use Google Search Console: Regularly check your Crawl Stats and Page indexing (formerly Coverage) reports in Google Search Console. These tools provide invaluable insights into your website’s crawlability and highlight areas for improvement.

How to Test Sitewide Crawlability

Testing your sitewide crawlability involves scanning your entire website for issues that could affect multiple pages. Here are the key steps:

- Run a Full Site Crawl: Use tools like Screaming Frog, SEMrush, or Ahrefs to crawl your entire site and generate a report on crawlability issues.

- Check Your Robots.txt File: Ensure that you’re not blocking any crucial sections of your site.

- Examine Your Sitemap: Verify that your XML sitemap includes all important pages and is free from errors.

- Analyze Your Redirects: Look for redirect chains or loops that could confuse search engines.

- Test for Server Errors: Identify and fix any server errors that might be blocking access to pages.

- Crawl Budget: Use Google Search Console’s Crawl Stats report to understand how often Google crawls your site and whether key pages are being overlooked.

How to Check if a Single Page is Crawlable

To ensure a specific page is crawlable, there are several methods:

- Google Search Console’s URL Inspection Tool: This tool allows you to see whether Google can crawl a particular URL and highlight any potential issues.

- Screaming Frog SEO Spider: This tool performs a deep crawl of your website, identifying crawlability problems like broken links or blocked pages.

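- Manual Check: View the page’s source code and check the robots meta tag. A “noindex” value keeps the page out of search results, and “nofollow” tells crawlers not to follow the links on the page. Neither stops the page itself from being crawled; that is controlled by robots.txt.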

By running these tests, you’ll have a clear understanding of whether a page is accessible to search engines.

What Is Crawl Budget and How Does It Affect Crawlability?

Crawl budget refers to the number of pages that a search engine will crawl on your website within a given timeframe. Larger websites need to manage their crawl budget carefully.

Prioritize your most important pages by linking them effectively and ensuring your less crucial pages don’t take up unnecessary crawl budget.
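
One rough way to see where crawl budget is going is to count Googlebot requests per site section in your server's access logs. The sketch below assumes logs in the common "combined" format and uses made-up sample lines; the `googlebot_hits_by_section` helper is hypothetical. It also trusts the user-agent string, which can be spoofed, so treat the numbers as an estimate.

```python
import re
from collections import Counter

# Matches the request, status code, and final quoted field (the user agent)
# of a combined-format access log line.
LOG_PATTERN = re.compile(
    r'"(?:GET|HEAD) (?P<path>\S+) HTTP/[^"]*" (?P<status>\d{3}) .*"(?P<agent>[^"]*)"$'
)

GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"

# Hypothetical log lines for illustration.
SAMPLE_LOG = [
    '66.249.66.1 - - [10/May/2024:10:00:00 +0000] "GET /blog/post-1 HTTP/1.1" 200 5123 "-" "%s"' % GOOGLEBOT_UA,
    '66.249.66.1 - - [10/May/2024:10:00:05 +0000] "GET /blog/post-2 HTTP/1.1" 200 4800 "-" "%s"' % GOOGLEBOT_UA,
    '66.249.66.1 - - [10/May/2024:10:00:09 +0000] "GET /search?q=shoes HTTP/1.1" 200 900 "-" "%s"' % GOOGLEBOT_UA,
    '203.0.113.7 - - [10/May/2024:10:01:00 +0000] "GET /blog/post-1 HTTP/1.1" 200 5123 "-" "Mozilla/5.0 (Windows NT 10.0)"',
]

def googlebot_hits_by_section(log_lines):
    """Count requests whose user agent mentions Googlebot, grouped by top-level path."""
    hits = Counter()
    for line in log_lines:
        match = LOG_PATTERN.search(line.strip())
        if match and "Googlebot" in match.group("agent"):
            path = match.group("path").split("?", 1)[0]  # drop query string
            section = "/" + path.lstrip("/").split("/", 1)[0]
            hits[section] += 1
    return hits

print(googlebot_hits_by_section(SAMPLE_LOG).most_common())
```

If a low-value section such as internal search results attracts a large share of the hits, that is a sign crawl budget could be better spent elsewhere.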

Conclusion

Ensuring that your site is easily accessible to search engine bots is crucial for indexing, ranking, and ultimately driving organic traffic. By addressing common crawlability issues—like poor site structure, server errors, or blocked pages—you can prevent your content from being overlooked by search engines.

But managing these aspects of SEO can be challenging, especially as your website grows. This is where professional help comes in.

At Go SEO Monkey, we specialize in improving crawlability and ensuring your website is fully optimized for search engines. From performing detailed crawlability tests to optimizing site structure and resolving technical issues, our team of experts can help your business achieve better visibility and rankings.

FAQs

- How do I know if my site is crawlable?

You can check your website’s crawlability using tools like Google Search Console or Screaming Frog.

- What are common causes of crawlability issues?

Common causes include blocking pages in robots.txt, server errors, and misconfigured nofollow tags.

- Can a site be crawlable but not indexed?

Yes, crawlability does not guarantee indexing. A site can be crawlable but may not be indexed due to poor content quality or other SEO issues.

- How does Googlebot decide which pages to crawl?

Googlebot prioritizes pages based on internal linking, sitemap structure, and the importance of the content.

- What is the impact of poor crawlability on SEO?

Poor crawlability prevents your pages from being indexed, which reduces your website’s visibility in search engine results.