To achieve strong search engine rankings, it’s essential to ensure that your links are crawlable. Crawlable links allow search engines to navigate your website and index its content effectively.

If your links are not easily accessible, search engines may miss important pages, which can negatively impact your site’s visibility and overall performance. Making your links crawlable is a fundamental part of a successful SEO strategy.

In this guide, we will explore the steps and best practices for optimizing your links to ensure they are fully crawlable, helping you enhance your website’s search engine presence and drive more traffic.

What are Web Crawlers?

What exactly is a web crawler? Think of web crawlers as digital librarians who traverse the internet, indexing and cataloging the content they find. They work by following links from page to page, gathering data to help search engines understand and rank your site.

So, how do these crawlers work their magic? They follow hyperlinks, process content, and assess site structure to determine how your pages should be indexed and ranked.
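To make the link-following idea concrete, here is a minimal sketch of a crawler in Python. It is only an illustration, not how any particular search engine works: the `SITE` dictionary, the `example.com` URLs, and the names `LinkExtractor` and `crawl` are assumptions made for this example, and the dictionary stands in for real HTTP requests so the code runs offline.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)


def crawl(start_url, pages):
    """Breadth-first crawl over `pages`, a dict mapping URL -> HTML.

    Returns the URLs in the order they were discovered.
    """
    seen = [start_url]
    queue = deque([start_url])
    while queue:
        url = queue.popleft()
        parser = LinkExtractor()
        parser.feed(pages.get(url, ""))
        for href in parser.hrefs:
            target = urljoin(url, href)  # resolve relative links
            if target in pages and target not in seen:
                seen.append(target)
                queue.append(target)
    return seen


# Hypothetical three-page site; nothing links to /orphan.
SITE = {
    "https://example.com/": '<a href="/about">About us</a>',
    "https://example.com/about": '<a href="/">Home</a>',
    "https://example.com/orphan": "<p>No page links here.</p>",
}

print(crawl("https://example.com/", SITE))
```

The crawl reaches the home page and the about page but never finds `/orphan`, because no link points to it. That is the practical meaning of crawlability: a page that cannot be reached through followable links may never be discovered.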

Why Links Need to Be Crawlable

Why should you prioritize making your links crawlable? If search engines can’t follow your links, they can’t index your pages. This means your content won’t appear in search results, potentially leading to decreased visibility and traffic.

Crawlable links are crucial for effective SEO, ensuring that your content is accessible to both search engines and users. Properly managed links can significantly enhance your site’s search engine ranking.

At its core, a crawlable link is one that a search engine crawler can easily follow and index. However, several common issues can affect link crawlability, such as broken links, incorrect link structure, or JavaScript-based navigation.

Ensuring your links are correctly formatted and accessible is essential for effective SEO.

How to Check if Your Links Are Crawlable

You might be wondering how to check if your links are crawlable. There are several tools and methods available:

- Google Search Console:

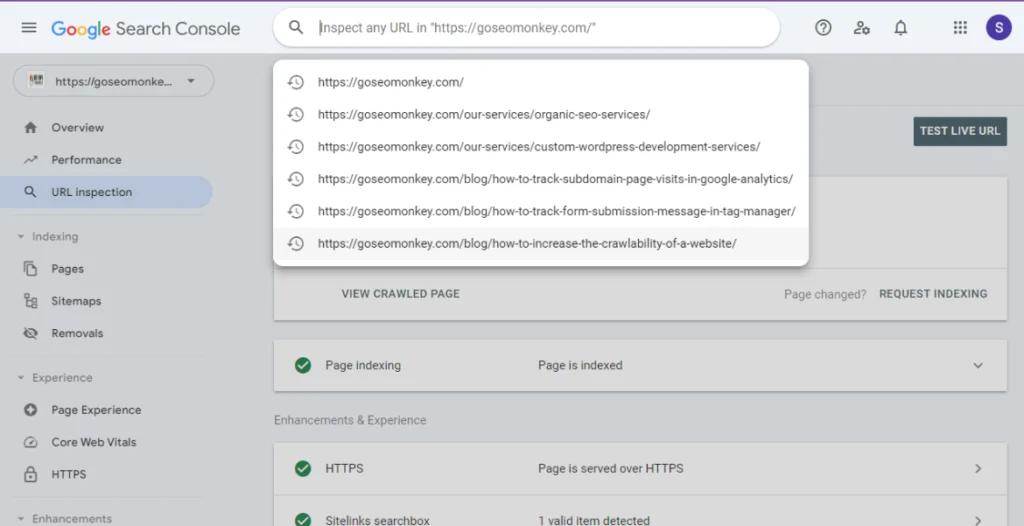

- URL Inspection Tool: Enter your URL in Google Search Console to see how Googlebot views your page. It shows if the page is indexed and highlights any issues.

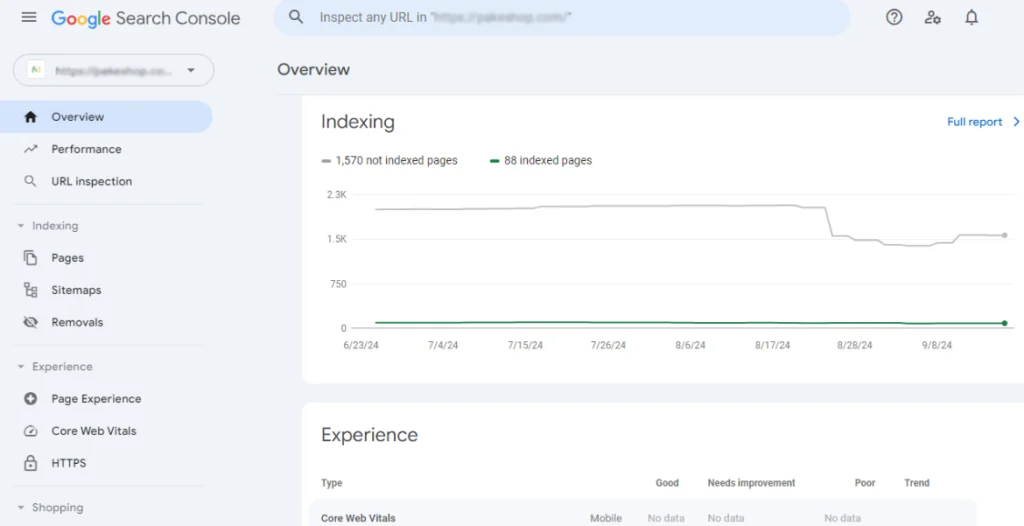

- Coverage Report: Check for crawl errors and issues with your site’s URLs.

Fixing Common Crawlability Issues

If you encounter crawlability issues, here’s how to address them:

Resolving Broken Links:

- Identify Broken Links: Use tools like Screaming Frog or Google Search Console to find broken links on your site.

- Update or Remove Links: Replace broken links with updated URLs or remove them if they no longer exist.
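If you prefer to script a quick check yourself, the sketch below shows one possible approach using only the Python standard library. The function names `http_status` and `find_broken_links` are made up for this example, and the URLs are placeholders. It sends HEAD requests, which some servers reject, so treat the results as a first pass rather than a replacement for a full crawler such as Screaming Frog.

```python
from urllib.error import HTTPError
from urllib.request import Request, urlopen


def http_status(url, timeout=10):
    """Return the HTTP status for `url`, or None if the request fails outright."""
    request = Request(url, method="HEAD")
    try:
        with urlopen(request, timeout=timeout) as response:
            return response.status
    except HTTPError as error:
        return error.code  # 4xx and 5xx responses arrive as exceptions
    except OSError:
        return None  # DNS failure, refused connection, timeout, ...


def find_broken_links(urls, get_status=http_status):
    """Return (url, status) pairs for links that fail or answer 4xx/5xx."""
    broken = []
    for url in urls:
        status = get_status(url)
        if status is None or status >= 400:
            broken.append((url, status))
    return broken


# Offline demonstration with made-up statuses instead of live requests.
fake_statuses = {
    "https://example.com/": 200,
    "https://example.com/old-page": 404,
}
print(find_broken_links(fake_statuses, get_status=fake_statuses.get))
```

Passing the status lookup in as a parameter keeps the reporting logic testable without network access. Calling `find_broken_links(list_of_urls)` with the default argument performs real requests.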

Dealing with “Crawlers Are Not Allowed to Access This Page” Errors:

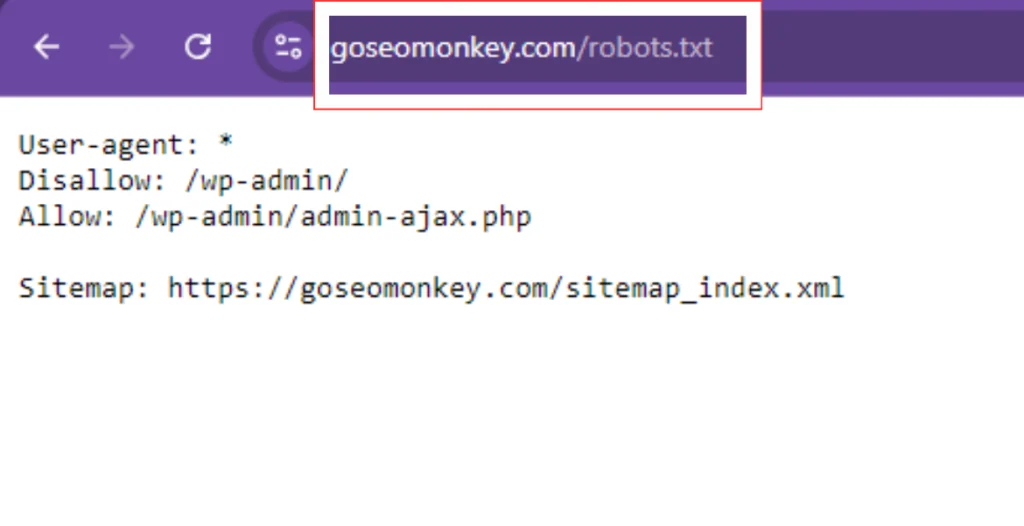

- Check Robots.txt File: Ensure your robots.txt file does not block important pages by reviewing its Disallow directives.

- Review Meta Tags: Verify that important pages do not carry a meta robots tag set to “noindex,” which keeps the page out of the index, or “nofollow,” which tells crawlers not to follow the links on the page.
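Both checks can be sketched in a few lines of Python. The example below uses the standard library's `urllib.robotparser`, whose rule matching may differ from Googlebot's in edge cases, so it is a rough pre-check rather than a definitive verdict. The robots.txt content, the URLs, and the helper names `allowed_by_robots` and `meta_robots_directives` are assumptions made for illustration.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


def allowed_by_robots(robots_txt, url, user_agent="Googlebot"):
    """Return True if `robots_txt` (file content as text) permits crawling `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


class MetaRobotsParser(HTMLParser):
    """Collects directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            content = attrs.get("content") or ""
            for part in content.split(","):
                if part.strip():
                    self.directives.add(part.strip().lower())


def meta_robots_directives(html):
    """Return the set of meta robots directives found in `html`."""
    parser = MetaRobotsParser()
    parser.feed(html)
    return parser.directives


# Hypothetical robots.txt that blocks everything under /private/.
ROBOTS_TXT = "User-agent: *\nDisallow: /private/\n"

print(allowed_by_robots(ROBOTS_TXT, "https://example.com/blog/"))
print(allowed_by_robots(ROBOTS_TXT, "https://example.com/private/report"))
print(meta_robots_directives('<meta name="robots" content="noindex, nofollow">'))
```

In this example the blog URL is allowed, the URL under `/private/` is blocked, and the sample tag yields both the `noindex` and `nofollow` directives.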

Managing Redirects Properly:

- Avoid Redirect Chains: Ensure redirects lead directly to the final destination without intermediate steps.

- Use 301 Redirects: Implement 301 redirects for permanent moves so that visitors and crawlers reach the new URL instead of a 404 error and ranking signals are consolidated on the destination page.

- Fix Redirect Loops: Identify and resolve any loops where redirects point back to each other, causing errors.
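Chains and loops are easy to spot once the redirects are written down as a mapping from source to target. The following sketch assumes you have already exported such a mapping (for example from a crawler report); the paths in `REDIRECTS` and the function name `trace_redirects` are invented for this example.

```python
def trace_redirects(start_url, redirects):
    """Follow `redirects` (a dict of source URL -> target URL) from `start_url`.

    Returns (path, verdict), where verdict is:
      "ok"    - no redirect, or a single hop to the final destination
      "chain" - two or more hops before the final destination
      "loop"  - the redirects lead back to a URL already visited
    """
    path = [start_url]
    while path[-1] in redirects:
        target = redirects[path[-1]]
        if target in path:
            return path + [target], "loop"
        path.append(target)
    hops = len(path) - 1
    verdict = "chain" if hops > 1 else "ok"
    return path, verdict


# Hypothetical redirect map.
REDIRECTS = {
    "/old": "/interim",
    "/interim": "/new",   # /old -> /interim -> /new is a chain
    "/a": "/b",
    "/b": "/a",           # /a and /b point at each other: a loop
    "/moved": "/new",     # single hop: fine
}

for url in ("/old", "/a", "/moved"):
    print(url, trace_redirects(url, REDIRECTS))
```

A chain such as `/old` to `/interim` to `/new` is fixed by pointing `/old` straight at `/new`. A loop has no valid destination, so one of the redirects involved has to be removed or retargeted.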

Advanced Techniques for Improving Link Crawlability

For more advanced optimization to improve crawlability, consider these techniques:

- Implementing XML Sitemaps

  - What It Is: An XML sitemap is a file that lists all important pages on your site.

  - How It Helps: It guides search engines to crawl and index your pages efficiently.

  - Implementation: Create an XML sitemap with a sitemap generator or a CMS plugin, then submit it to search engines, for example through Google Search Console.

- Utilizing Robots.txt File Effectively

  - What It Is: A robots.txt file instructs search engines on which pages they can or cannot crawl.

  - How It Helps: It helps manage crawler access and can keep crawlers away from duplicate or irrelevant content. Note that robots.txt controls crawling rather than indexing: a blocked URL can still be indexed if other pages link to it, so use a “noindex” meta tag on a crawlable page when the goal is to keep it out of search results.

  - Implementation: Ensure important pages are not blocked and update the file to reflect changes in your site structure.

- Optimizing Internal Linking Structure

  - What It Is: Internal linking involves connecting pages within your site to enhance navigation.

  - How It Helps: It helps search engines discover new pages and understand the hierarchy of your site.

  - Implementation: Use clear, descriptive anchor text for internal links and ensure all important pages are linked from other parts of your site.
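As an illustration of the sitemap step, here is a minimal sketch that builds a sitemap in the sitemaps.org format with Python's standard library. The function name `build_sitemap` and the URLs are placeholders, and a real sitemap might also include optional fields such as a last-modified date.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return sitemap XML (as a string) with one <url><loc> entry per URL."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url  # special characters are escaped
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical list of important pages.
PAGES = [
    "https://example.com/",
    "https://example.com/services",
]

print(build_sitemap(PAGES))
```

Save the output as a file such as `sitemap.xml` at the root of your site, then submit its URL to search engines.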

Crawlability Testing Tools

Several tools can help you test and improve link crawlability:

- Screaming Frog SEO Spider

  - Function: Crawls websites to identify SEO issues, including crawlability problems.

  - Features: Checks for broken links, redirects, and meta tags; provides detailed reports.

  - Usage: Enter your website URL and run the crawl. Review the data for any crawlability issues.

- Ahrefs Site Audit

  - Function: Analyzes your website for SEO issues and crawlability.

  - Features: Detects broken links, duplicate content, and crawl errors; offers recommendations for fixes.

  - Usage: Submit your site URL and review the audit results for insights on improving crawlability.

- SEMrush Site Audit

  - Function: Evaluates your site’s health, focusing on crawlability and SEO.

  - Features: Identifies errors, broken links, and optimization opportunities; provides actionable recommendations.

  - Usage: Enter your website URL and analyze the audit report to address any crawlability issues.

- Google Search Console

  - Function: Monitors your site’s performance in Google search and identifies crawl issues.

  - Features: Shows crawl errors, indexing status, and search performance; allows for URL inspection and indexing requests.

  - Usage: Access the “Coverage” report (labeled “Page indexing” in current versions) and the “URL Inspection” tool to check for and fix crawl issues.

- DeepCrawl (now Lumar)

  - Function: Comprehensive site crawler for SEO analysis and crawlability testing.

  - Features: Detects technical issues, such as broken links and redirect chains; provides in-depth crawl data.

  - Usage: Input your site’s URL and review the detailed crawl reports for optimization suggestions.

- Sitebulb

  - Function: Visual site crawler that identifies crawl issues and provides insights.

  - Features: Highlights broken links, duplicate content, and accessibility issues; offers visualizations of site structure.

  - Usage: Run a crawl on your site and analyze the visual reports to address any identified problems.

Requesting Google to Crawl Your Links

If you’ve made significant changes to your site, you might want to request a crawl from Google. Here’s how:

Submit a Google Crawl Request:

- Use the URL Inspection Tool: Go to Google Search Console and enter the URL of the page you want to be crawled. Click on “Request Indexing.”

- Purpose: This prompts Google to check the page for updates and reindex it if necessary.

Best Practices for Requesting a Crawl:

- Ensure Page Quality: Make sure the page is free of errors, optimized, and complete before submitting.

- Use Sparingly: Only request a crawl for significant changes or new content, as frequent requests may not expedite the process.

Monitor the Status:

- Check Crawl Status: After requesting, monitor the page’s status in Google Search Console to see if the indexing request was successful.

Best Practices for Creating Crawlable Links

To make sure your links are crawlable, follow these best practices:

- Use Descriptive and Relevant Anchor Text: Instead of vague phrases like “click here,” use descriptive anchor text that provides context about the linked page. This helps crawlers understand the content of the destination page.

- Avoid JavaScript for Important Links: Crawlers reliably follow only standard HTML anchor elements that have an href attribute pointing to a real URL. Links that exist only as JavaScript click handlers may never be discovered, so use standard HTML links for your important pages.

- Ensure Proper HTML Structure: Make sure your HTML is well-structured and valid. This includes using correct tags and nesting elements properly, which helps crawlers navigate your site more effectively.
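These practices can be checked automatically. The sketch below scans a snippet of HTML and flags anchors that have no followable href or that use vague anchor text. The `VAGUE_ANCHORS` list, the class name `LinkAuditor`, and the sample markup are assumptions chosen for illustration; adjust them to your own site.

```python
from html.parser import HTMLParser

# Example phrases treated as vague; extend this set as needed.
VAGUE_ANCHORS = {"click here", "here", "read more", "learn more", "more"}


class LinkAuditor(HTMLParser):
    """Flags <a> tags that lack a followable href or use vague anchor text."""

    def __init__(self):
        super().__init__()
        self.issues = []       # (problem, detail) tuples
        self._in_link = False  # True while inside an <a> element
        self._href = ""        # href of the <a> currently open
        self._text = []        # text collected inside that <a>

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._in_link = True
            self._href = (dict(attrs).get("href") or "").strip()
            self._text = []

    def handle_data(self, data):
        if self._in_link:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag != "a" or not self._in_link:
            return
        self._in_link = False
        text = " ".join("".join(self._text).split())  # normalize whitespace
        href = self._href
        if not href or href == "#" or href.lower().startswith("javascript:"):
            self.issues.append(("no crawlable href", text))
        elif text.lower() in VAGUE_ANCHORS:
            self.issues.append(("vague anchor text", href))


def audit_links(html):
    """Return a list of (problem, detail) tuples for the links in `html`."""
    auditor = LinkAuditor()
    auditor.feed(html)
    auditor.close()
    return auditor.issues


SAMPLE_HTML = """
<a href="/pricing">See pricing plans</a>
<a href="/guide">Click here</a>
<a onclick="goTo('/contact')">Contact us</a>
<a href="javascript:void(0)">Open menu</a>
"""

for issue in audit_links(SAMPLE_HTML):
    print(issue)
```

In the sample, only the first link passes: the second uses vague anchor text, and the last two give crawlers no URL to follow.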

Conclusion

Ensuring your links are crawlable is essential for maximizing your website’s SEO potential. Crawlable links enable search engine bots to discover and index your content, which directly impacts your site’s visibility and search engine ranking.

Properly managed crawlability leads to better search engine performance, increased organic traffic, and a more user-friendly site.

If you want to enhance your website’s crawlability and overall SEO strategy, Go SEO Monkey offers expert services tailored to improve link crawlability for businesses. Their team can conduct thorough audits, fix crawl issues, and implement best practices to boost your site’s performance.

FAQs

- What is a link crawler?

A link crawler, or web crawler, is a tool used by search engines to index and analyze web pages by following links.

- How can I tell if my links are crawlable?

Use tools like Google Search Console, Screaming Frog, or Ahrefs to check if your links are crawlable and identify any issues.

- What should I do if my links are not crawlable?

Fix broken links, review your robots.txt file and meta tags, and ensure your HTML structure is correct.

- How often should I check link crawlability?

Regularly check for issues, especially after major updates or changes to your site, to maintain optimal crawlability.

- Can crawlability affect my website’s SEO performance?

Yes, crawlability directly impacts your SEO performance by determining how well search engines can index your content and rank your site.